Toolkit

Guiding questions

For the collection and processing of their data, project managers should consider the following questions:

- How will citizen scientists be involved in your data collection and analysis?

- What support do citizen scientists need to engage with the data process in different ways, and how will this be provided?

- Have you completed a data management plan?

- How will you collect / store / process data? Are you planning on publishing your data? Where? How?

- Are you using any personal data, and if so, how do you comply with legal requirements such as the GDPR?

- How will you ensure data quality?

- How will you analyse your data? What will you do with the results of your analysis?

Data collection & analysis

In the data collection and data analysis stages, projects implement the methodology they have defined previously to acquire, curate, process, analyse and interpret their data.

Data in citizen science can be many things, and there is no one definition of it. For the purpose of this toolkit, we understand data to be the pieces of information collected for the purpose of generating insight. Depending on the project, data could consist of images, observations, descriptions, categorisations, physical samples, audio files, or a variety of other details. A dataset is a collection of data, and metadata is data about a dataset, which describes its properties, such as the title or description, who collected it, how it is licensed, etc.

Different kinds of data are typical for the different types of projects:

- In grass-roots citizen science, projects are often very local and collect data in a specific area, such as air quality measurements from sensors in homes or details about products used in the household. For Citicomplastic, data consisted of photos of compost, a measurement of the temperature, and description of its consistency and smell, taken every week. It was then analysed to demonstrate that home composting bioplastic was not feasible.

- In longer research projects, where data is collected over long periods of time, it is important that data is in a highly standardised format, which allows it to remain comparable. For De Vlinderstichting, data consists of reported counts of butterflies and dragonflies from each walk of each participant on each of their transects in the whole of the Netherlands. This data is used by the national government to monitor species and the environmental impacts of policies over time, and to highlight urgent issues. Participants also collect water samples, which are frozen and sent to a laboratory for analysis, to identify pollutants. For Street Spectra, data consists of photos taken by participants with mobile phones and a spectrograph; they are submitted with metadata on the location and comments, such as the type of lamp as identified by the participant.

- For educators, data is not so much the driving force for the projects, as for the participants themselves, who collect and analyse it in order to learn about science, and understand a specific issue. For Students, air pollution and DIY sensing, data consists of measurements collected by students with their own air pollution sensors. They analyse it based on their own research design to understand the issue of air pollution in their environment.

- In online projects, data can be anything that can be processed digitally: images that are submitted, or classifications of images in a variety of contexts; observations of species, or stars; transcriptions of texts, or descriptions of items. For Restart data workbench, data consists of records of repairs from their workshops, which are then analysed to approximate the environmental impact of those repairs, and drive policy on repairability of products.

TOOLS

Tools for data collection

These tools, developed and/or used by ACTION, can help projects gather the data they need.

- Coney is a survey tool designed to enhance the user experience when responding to surveys, with a conversational approach: on the one hand, Coney allows modelling a conversational survey with an intuitive graphical editor; on the other hand, it allows publishing and administering surveys through a chat interface. Users can define a graph of interaction flows, in which the following question depends on the previous answer provided by the respondent. This offers a high degree of flexibility to survey designers, who can simulate a human-to-human interaction with a storytelling approach that enables different personalised paths. We provide further guidance on how to use Coney here.

- Epicollect is an easy-to-use mobile application, which allows citizens to design their own forms to collect data, taking advantage of mobile functionalities such as geolocation, camera images, accelerometer, etc.

- The Virtual City Explorer is a web-based tool that allows projects to collect data about static infrastructure items in cities, by asking contributors to explore 3D environments on a page embedded from Google Street View.

- The Making Sense project has developed a Citizen Sensing Toolkit, including a wealth of activities for the use of sensors and other data collection activities in citizen science projects.

ACTION data webinars

The ACTION team has hosted several webinars on data processing:

- Webinar on the data lifecycle, which explains open data, open science, and the best way for CS projects to publish their data.

- Webinar on data protection and processing, which explains how CS projects can work with data while complying with the GDPR.

- Webinar on data management with data management plans and data quality assurance.

CASE STUDIES

Street Spectra

Citizen scientists in Street Spectra were primarily engaged in data collection activities. The project provides them with a spectrograph, which they hold in front of their mobile phone camera to take photos of light spectra of street lights when they are out and about. These photos are then uploaded to the project's database through a mobile app (Epicollect), together with some metadata collected from participants' mobile phones, such as the date and time, and their location. The data is published directly onto a public database. The project team had planned to engage participants continuously in this data collection. However, the national lockdown in Spain in 2020 prevented all kinds of public outreach and educational activities. In the meantime, they decided to include citizens in data classification, by using the Zooniverse online platform, which left them with two parts of the project to manage on two different platforms. Images and associated metadata had to be copied from Epicollect to Zooniverse. During 2021, work was done to coordinate the usage of these two platforms by means of an IT infrastructure deployed by the ACTION consortium itself. The tool selected for the job was Apache Airflow, which allows workflows between IT systems to be defined. The tool by itself was not enough and had to be supplemented with custom-developed connectors to interact with Epicollect and Zooniverse.

Data Management

ACTION recommends projects adhere to open science and the FAIR data principles. Open science commonly refers to efforts to make research outputs more widely accessible. Especially where this science is publicly funded, its results should be publicly available, so they can benefit further research, innovation, or citizens directly. Open Science also increases media attention, citations, collaborations, job opportunities and research funding (McKiernan et al., 2016).

The FAIR principles are designed to make data more widely usable, including machine-usable. They are good practice for publishing data in any context, including citizen science. The principles are:

- Findability: Data should be published with persistent identifiers (such as a DOI or a stable URL), and include comprehensive metadata.

- Accessibility: Once found, both data and metadata should be easy and free to access, though authentication may be necessary.

- Interoperability: It should be possible to integrate the data with other data sources through common schemas, and to process the data with common applications.

- Reusability: Data should be exhaustively described and licensed to enable reuse.

In line with best practice from open science, the openness and availability of data should be considered throughout the project and should guide many of the data collection, analysis and dissemination decisions.
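As an illustration of how metadata can support the FAIR principles, the following is a minimal sketch of a metadata record and a check for missing fields. The field names, the example values, the mapping of fields to principles, and the `missing_fields` helper are all assumptions made for this example; they are not a schema prescribed by ACTION or by the FAIR principles themselves.

```python
# Hypothetical metadata record for a citizen science dataset.
# Every field name and value below is an illustrative placeholder.
metadata = {
    "identifier": "https://example.org/datasets/street-light-spectra",
    "title": "Street light spectra photos",
    "description": "Photos of street light spectra with location and lamp type.",
    "creators": ["Project volunteers"],
    "access_url": "https://example.org/datasets/street-light-spectra/download",
    "format": "text/csv",
    "license": "CC-BY-4.0",
}

# Assumed mapping from each FAIR principle to the fields that support it.
REQUIRED_FIELDS = {
    "findable": ["identifier", "title", "description"],
    "accessible": ["access_url"],
    "interoperable": ["format"],
    "reusable": ["license", "creators"],
}


def missing_fields(record):
    """Return, per FAIR principle, the required fields that are absent or empty."""
    gaps = {}
    for principle, fields in REQUIRED_FIELDS.items():
        missing = [name for name in fields if not record.get(name)]
        if missing:
            gaps[principle] = missing
    return gaps


if __name__ == "__main__":
    # An empty dictionary means no assumed required field is missing.
    print(missing_fields(metadata))
```

A check like this could be run before publication, so that gaps in the description or licensing of a dataset are noticed early rather than after the data has been released.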

TOOLS

Data Management Plan Tool

This tool helps you to generate a Data Management Plan. It is based on a questionnaire, complemented with a chatbot for non expert users. We also provide a tutorial for the use of the tool.

CASE STUDIES

Noise Maps

Noise Maps collects sound samples from both residential and public buildings, as well as guided walks. The data was collated by project host BitLab, who, together with researchers from their partner university, developed an automated data pipeline that processed all the raw sound data to train AI models to automatically detect different types of sounds in the recordings: cars, machinery, bird songs, etc., which together formed the soundscape of the neighbourhoods of Barcelona where the samples were recorded. Any human voices on the recordings were obscured, to protect the privacy of bystanders and participants. All data was uploaded to Freesound, a free, public repository of sound samples, from where it was visualised on maps, and can be used by other interested parties.

When collecting or working with data, projects should take special care to consider how they use personal data. This could simply mean details of their participants, which need to be stored safely; or data collected by participants, which may include location / GPS details. Any data that refers to a natural, living, identifiable person falls under the remit of the GDPR – the European General Data Protection Regulation. It doesn’t really matter what happens with this data – whether it is only stored for safekeeping or used for analysis, the same principles apply. If the project controls the data, it (or its host organisation) will be considered as the data controller, which means they are responsible for ensuring that the data is processed in line with legal requirements. The main mechanism that allows projects to process data lawfully is the consent of the data subjects: Participants explicitly agree to their data being stored or used for a specific purpose (usually the participation in or contributions to the project). All details about which and how personal data is used should be captured in a data management plan.

Projects should complete a data management plan – however provisional – as early as possible. A data management plan describes the lifecycle of the data, and includes a summary of the data, its origin and format, how it maps onto the FAIR principles, how it is stored, processed and protected, and whether and how any potential ethical issues with the data are dealt with. The plan will help to understand what data is needed, how it is stored, what protection mechanisms are required for any personal data, and where and how the data is going to be published. It should be updated or replaced as necessary throughout the project’s lifetime.

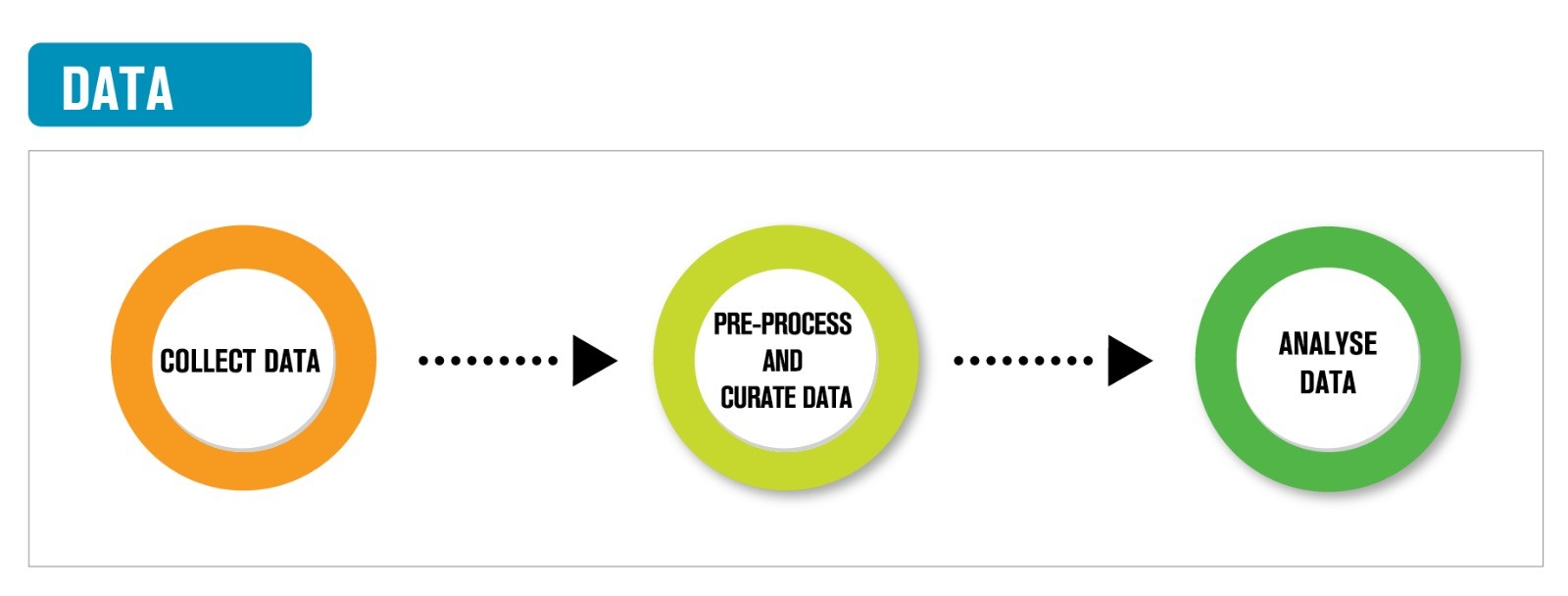

There are four main steps that citizen science projects must take to gather and analyse their data. There are additional data management principles associated with visualising and publishing results, which we discuss further under Results.

- Data collection: First, projects need to identify the data they require, and where it can be collected. Data can be created by sensors, such as air pollution or sound sensors which citizen scientists operate, or by citizen scientists, for example when they record or categorise observations. When citizen scientists create the data, platforms like Epicollect or Zooniverse are often used to collect it. A mini tutorial (see next section) is available to support projects in creating projects on both platforms. During data collection, projects need to take special care to ensure their process is compliant with data protection regulations, especially where personal data (such as contact details of participants) is involved.

- Data preprocessing: After data is collected and available to the project, it may need to be cleaned, to remove ‘noise’, or invalid data, and ensure the collated data is in a format that can be used for analysis. Typically, data cleansing is necessary to identify and correct (i) intrinsic errors made by the sensors used to collect the data (e.g. GPS positions of mobile phones might be of low quality when there was a poor connection); or (ii) incomplete submissions or outliers, when data was collected by participants, which might affect the quality of the further analysis (e.g., poor-quality answers in surveys).

- Data aggregation: Next, CS projects need to coherently group the data they collected. This is particularly relevant for classification projects. For example, in Street Spectra, users have to identify the spectra emitted by lampposts. For this purpose, the project created a Zooniverse project to classify the different spectra. After a number of responses, the project is faced with a set of different values for the same item, and has to decide which is the correct one. There are a number of techniques to support this decision, such as majority voting (choosing the option with the most votes) or the Fleiss' kappa statistic, which measures how strongly contributors agree with each other. Another example is locations, for example of lampposts. Citizen scientists may generate this information, but submit different positions (latitude and longitude) for the same object. It is necessary to reconcile these observations into a single one. In these cases, it is worth identifying whether the different positions refer to the same lamppost; the positions could then be reconciled by reducing the precision of the observations (removing some decimals).

- Data analysis: Data analysis is the core part of a CS project, where the collected data is examined to extract high-level information from it, and ultimately to respond to the research questions set out at the beginning. Prior experience or external expertise can be particularly helpful during data analysis, since solid knowledge of the methods and their practical application can speed up the analysis itself, and reduce the probability of errors. However, citizen scientists may also want to understand and be able to analyse the data for themselves. In many CS projects, data analysis can also include analysing the contributions by citizen scientists. For example, projects could investigate the number of errors a contributor made with respect to some set standards, or focus on inter-annotator agreement, to measure how well a group of annotators can make the same annotation decision for a certain category.
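The aggregation techniques mentioned above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the method used by any ACTION project: the function names, the lamp type labels and the choice of four decimals are hypothetical. `majority_vote` picks the most frequent label, `fleiss_kappa` computes the standard Fleiss' kappa from a table of counts (one row per item, one column per category, the same number of raters for every item), and `group_positions` groups coordinates that become identical after rounding.

```python
from collections import Counter


def majority_vote(labels):
    """Return the most frequent label and its share of the votes.

    On a tie, the label that appears first in `labels` is returned.
    """
    label, votes = Counter(labels).most_common(1)[0]
    return label, votes / len(labels)


def fleiss_kappa(table):
    """Fleiss' kappa for a table of counts.

    table[i][j] is the number of raters who assigned item i to category j.
    Every row must sum to the same number of raters (at least 2).
    """
    n_items = len(table)
    n_raters = sum(table[0])
    n_categories = len(table[0])
    # Share of all assignments that went to each category.
    p_cat = [
        sum(row[j] for row in table) / (n_items * n_raters)
        for j in range(n_categories)
    ]
    # Observed agreement per item, then averaged over items.
    p_item = [
        (sum(c * c for c in row) - n_raters) / (n_raters * (n_raters - 1))
        for row in table
    ]
    p_observed = sum(p_item) / n_items
    # Agreement expected by chance.
    p_expected = sum(p * p for p in p_cat)
    if p_expected == 1:
        raise ValueError("kappa is undefined when only one category is ever used")
    return (p_observed - p_expected) / (1 - p_expected)


def group_positions(positions, decimals=4):
    """Group (latitude, longitude) pairs that are equal after rounding."""
    groups = {}
    for lat, lon in positions:
        key = (round(lat, decimals), round(lon, decimals))
        groups.setdefault(key, []).append((lat, lon))
    return groups


if __name__ == "__main__":
    # Hypothetical classifications of one photo by three contributors.
    print(majority_vote(["LED", "LED", "sodium"]))
    # Two photos, three raters each, two categories.
    print(fleiss_kappa([[3, 0], [0, 3]]))
    # Three reported positions; the first two are likely the same lamppost.
    print(group_positions([(41.38791, 2.16992), (41.38794, 2.16989), (41.40010, 2.20000)]))
```

Rounding is a simple heuristic: two nearby positions can still fall on either side of a rounding boundary and end up in different groups, so the number of decimals should be chosen with the expected positioning error in mind and the results checked by hand where possible.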

Data quality

Alongside the above data processing, citizen science projects should consider the quality of their data, as poor quality data cannot satisfy the purpose for which it was collected. To ensure the quality of their data, projects need to understand what could affect it. The causes could be very obvious (e.g., citizen scientists are not yet familiar with data collection protocols and need training), or issues with the data could be discovered during data collection (e.g., evaluation scales are too subjective and data collected by different citizen scientists are not comparable).

Our own studies highlight that some indicators are more frequent in CS projects, such as completeness (for geographical coverage, task/observation, number of functioning sensors/sampling), accuracy (for equipment, expert’s acceptance, instrument calibration), timeliness (for time frame, scale, resolution, etc.) and consistency (depending mostly on volunteers’ preparation). We identified suggestions on the most common causes of bad data quality in citizen science initiatives, and data quality improvement activities that were applied across projects, despite the different topics they covered. They included the improvement in the volunteers’ training, sensors or toolkits’ instruments and manuals, constant review of data acquisition activity, improvement in internal communication, integration of activities from different volunteers, increased acquisition in uncovered areas, etc. (Baroni et al., 2022).

Another important aspect of data quality assurance is the set of dimensions to be considered, such as the completeness, accuracy, timeliness, consistency, and accessibility of the data. Projects should consider which dimensions are relevant for them depending on the nature of their data. It is good practice to define indicators for each dimension and measure them, to check whether there are any issues. If issues are found, ad-hoc activities can be designed to improve data quality. ACTION created a template to help citizen science projects to analyse data quality and to improve it.
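To make the idea of measurable indicators more concrete, the sketch below computes two simple indicators for a list of records: one for completeness and one for timeliness. The record fields (`count`, `transect`, `observed`, `submitted`), the example values and the seven-day threshold are assumptions chosen for illustration; each project would define its own indicators and thresholds.

```python
from datetime import date


def completeness(records, required_fields):
    """Share of records in which every required field is present and non-empty."""
    if not records:
        return 0.0
    complete = sum(
        all(record.get(field) not in (None, "") for field in required_fields)
        for record in records
    )
    return complete / len(records)


def timeliness(records, max_delay_days):
    """Share of dated records submitted within max_delay_days of the observation."""
    dated = [r for r in records if r.get("observed") and r.get("submitted")]
    if not dated:
        return 0.0
    on_time = sum(
        (r["submitted"] - r["observed"]).days <= max_delay_days for r in dated
    )
    return on_time / len(dated)


# Hypothetical records; a count of 0 is a valid value, None means "missing".
records = [
    {"count": 12, "transect": "T1", "observed": date(2021, 6, 1), "submitted": date(2021, 6, 2)},
    {"count": None, "transect": "T2", "observed": date(2021, 6, 1), "submitted": date(2021, 6, 20)},
    {"count": 0, "transect": "T3", "observed": date(2021, 6, 3), "submitted": date(2021, 6, 3)},
]

if __name__ == "__main__":
    print("completeness:", completeness(records, ["count", "transect"]))
    print("timeliness:", timeliness(records, max_delay_days=7))
```

Tracking such indicators at regular intervals makes it easier to see whether an improvement activity, such as additional volunteer training, has had an effect.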

TOOLS

Data quality resources

Data Quality Assurance Template

This template is produced to guide projects to continuously check their data quality throughout their lifetime. It offers instruments to evaluate possible causes of low quality in data, a way to create ad hoc indicators and how to measure them, and a list of activities to improve the indicators.

Data Quality Resource Compendium

Developed by a specialist team within the Citizen Science Association, this compendium offers a wealth of guidance documents, manuals, and workbooks for quality control and assurance in citizen science projects. Each entry provides a link to the resource, information about the authors and intended audience, and which aspects of the data management cycle are addressed.

GUIDELINES & RECOMMENDATIONS

GDPR Checklist

You can use this checklist to confirm whether your use of data conforms to the European General Data Protection Regulation. The website includes a wealth of information on the use and protection of data.

CASE STUDIES

Tatort Streetlight

Citizen scientists in Tatort Streetlight are asked to collect and identify insects. The collections are taken using insect traps, and citizen scientists also collect associated metadata, such as the time of the collection (start and end date), type of the trap (emergence trap for emerging insects from water bodies, eclector traps for flying insects at street lamps, or light traps for flying insects attracted to UV light) and the location (one of four study areas or an experimental field site). Citizen scientists use these collections to identify the specimens based on their insect order. The sorted specimens are stored in separate vials. These activities are also used for education within workshops, supervised by project coordinators, to present the differences of insect orders and biodiversity caught at street lights to students. Citizen science experts further identify the insect family or species. To identify the insects remotely, e.g. at workshop facilities or if they took parts of the insect collections to their private homes, citizen scientists can use an Epicollect project or a handwritten template. So far, the entomologists and workshop groups have preferred to use the templates, but as participation grows, the Epicollect platform will be a useful tool.

Restart data workbench

The Restart Data Workbench project works on data that is collected by volunteers, in repair workshops across Europe. Citizen scientists are engaged in online microtasks, to classify the types of faults in selected products. Restart had already worked with microtasks for citizen science engagement, to see whether people that have had other levels of engagement would transition to those tasks, or whether they could engage new people who might not feel they have the skills to be active in a repair community per se. One challenge with this work was that the data collected at the workshops was often quite messy, which meant that it needed to be prepared for use with citizen scientists.

Restart engaged citizen scientists in classifying this data, because it allows people that are already part of the initiative to be more engaged, to use their experience to be part of the change they want to see, and to become more aware of the wider implications of their engagement. Equally, it helps to improve future data collection, and increase interest in collecting more data in the community. Lastly, it is a more sustainable approach than having analysis conducted by single professionals. Having multiple citizen scientists look at each record also strengthens the validity of the data.